How To Build A Travel AI Assistant That Doesn't Hallucinate 🤖

The Future of Open-Source AI and Research

Welcome back to Vanishing Gradients! I’ve recently started this newsletter to track all things data science, ML, and AI, as well as to share what I’ve been working on. This is still an experiment, so please let me know what you’d like to see more (or less!) of as we continue this journey.

There’s a lot to dive into this week, from hands-on coding sessions to discussions about the future of AI research, so let’s jump right in! 🤖

HOW TO BUILD A TRAVEL AI ASSISTANT THAT DOESN’T HALLUCINATE 🤖

Last week, I did a live coding session with Alan Nichol, CTO and co-founder of Rasa. Alan and his team have been building conversational AI systems for Fortune 500 companies for over a decade, and they’re at the forefront of embedding LLMs into reliable software.

In our session, we explored how to combine the power of LLMs with business logic to create AI agents that don’t hallucinate and deliver consistent results.

In this clip, we dive into the key elements of building a travel assistant that can book flights, hotels, and more—without going off track.

Key takeaways include:

Designing with business logic: How to anchor LLMs in real-world tasks to prevent hallucinations.

Maintaining accuracy: The role of clear rules and validation in conversational AI.

Practical example: Building a travel assistant capable of handling various tasks, like booking flights, hotels, and excursions.
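
To make the "business logic plus validation" idea concrete, here is a minimal sketch in plain Python. It is not Rasa's API and not the code from the session. It assumes the LLM is only asked to propose structured slots (a dict), and that deterministic rules decide whether anything gets booked. The names `KNOWN_ROUTES`, `FlightRequest`, `validate`, and `handle` are all hypothetical, as are the route list and the passenger limit.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical catalogue of routes the business actually sells (an assumption).
KNOWN_ROUTES = {("BER", "LIS"), ("BER", "NYC"), ("LIS", "NYC")}


@dataclass
class FlightRequest:
    origin: str
    destination: str
    depart: date
    passengers: int


def validate(request: FlightRequest, today: date) -> list[str]:
    """Return a list of rule violations; an empty list means the request is OK."""
    problems = []
    if (request.origin, request.destination) not in KNOWN_ROUTES:
        problems.append(f"no route {request.origin}->{request.destination}")
    if request.depart < today:
        problems.append("departure date is in the past")
    if not 1 <= request.passengers <= 9:  # assumed limit, for illustration only
        problems.append("passengers must be between 1 and 9")
    return problems


def handle(llm_output: dict, today: date) -> str:
    """Act on the model's proposed slots only if every rule passes."""
    try:
        request = FlightRequest(
            origin=str(llm_output["origin"]).upper(),
            destination=str(llm_output["destination"]).upper(),
            depart=date.fromisoformat(llm_output["depart"]),
            passengers=int(llm_output["passengers"]),
        )
    except (KeyError, ValueError, TypeError) as exc:
        # Missing or malformed slots: ask the user instead of guessing.
        return f"clarify: could not read request ({exc})"

    problems = validate(request, today)
    if problems:
        return "clarify: " + "; ".join(problems)
    return (
        f"book: {request.origin}->{request.destination} "
        f"on {request.depart.isoformat()} for {request.passengers}"
    )
```

The design choice is that the model never books anything itself. If it invents a destination or a date, the rules catch it and the assistant asks a follow-up question instead.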

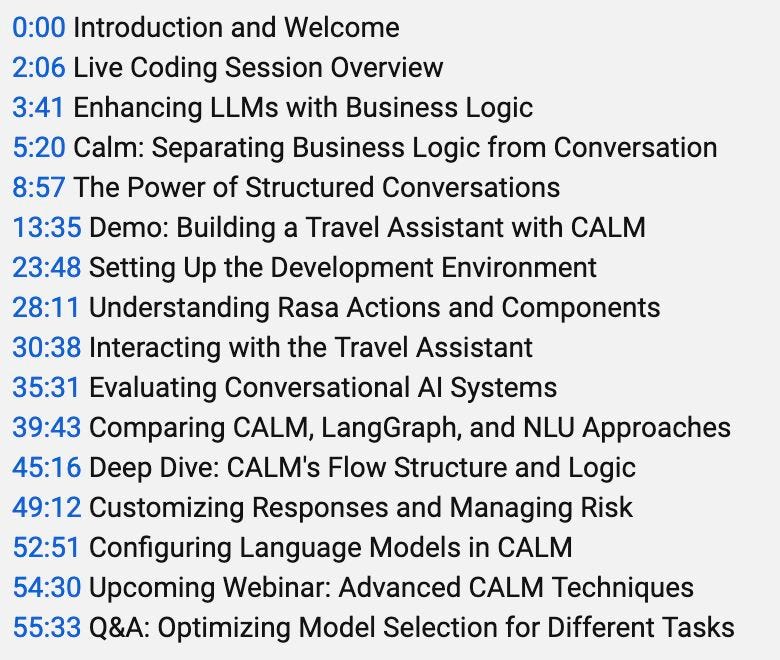

👉 Check out the full video here to learn more about creating reliable AI assistants that combine the best of LLMs and structured logic.

Even better, jump into the GitHub repo, spin up a Codespace, and code along with us! 🤖

From Theory to Practice -- Machine Learning Engineering with Santiago Valdarrama

I recently had a great chat with Santiago Valdarrama about machine learning and AI engineering. Santiago’s insights are spot on, and he shares real-world examples that bring clarity to complex ideas 👌

Check out this two-minute clip of him talking about data drift:

📉 Data & model drift, and why monitoring is essential

📱 Changes in image quality from evolving smartphone cameras impacting model predictions

🔄 Practical steps for adapting models to real-world shifts in data

🧻 How COVID shifted demand forecasting models (hello, toilet paper 🧻)
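
As a rough illustration of what monitoring for drift can look like, here is a small sketch that compares a feature's distribution at training time with its distribution in production. It uses the Population Stability Index (PSI), which is one common choice and not necessarily the method Santiago describes in the clip. The function name `psi`, the bin count, and the example feature (say, mean image brightness per photo) are assumptions for illustration.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bins are equal-width over the range of the reference sample; values in
    `current` that fall outside that range are counted in the edge bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width if reference is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical data: brightness at training time vs. after a camera upgrade.
training = [i / 100 for i in range(100)]
production = [v + 0.5 for v in training]
drift_score = psi(training, production)
```

A score of zero means the two samples fill the bins identically, and larger scores mean a bigger shift. A commonly quoted rule of thumb treats values above roughly 0.25 as worth investigating, but the right threshold depends on the feature and the model.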

These are the topics we covered, and you can watch the rest of the fireside chat here:

The Future of Open-Source AI and Research Infrastructure at Eleuther AI

Later this month, I’m thrilled to be hosting a fireside chat with Hailey Schoelkopf, a research scientist at Eleuther AI. Among many other things, Hailey maintains the LM Evaluation Harness, the backbone of 🤗 Hugging Face’s Open LLM Leaderboard. Her work has been used in hundreds of research papers and by organizations like NVIDIA, Cohere, and Mosaic ML.

Eleuther AI started as a Discord server for chatting about GPT-3 and rapidly became a leading non-profit research institute focused on large-scale artificial intelligence research. As part of their mission, they seek to:

advance research on interpretability and alignment of foundation models;

ensure that the ability to study foundation models is not restricted to a handful of companies;

educate people about the capabilities, limitations, and risks associated with these technologies.

👉 Sign up here to join the chat, or check out a previous talk with Hailey where we took A Deep Dive into LLM Evaluations:

⚡️ MASTERING AI PLATFORMS: INSIDE UBER’S MACHINE LEARNING ENGINE WITH MIN CAI

Last time in Data Dialogues, we explored AI leadership and discussed how to balance strategic following vs. chasing trends, as well as the importance of judgment in decision-making (see here for more). Now, we’re shifting gears to talk about Building AI & ML Platforms with Min Cai, who has spent the last decade leading Uber’s machine learning platform.

Key takeaways from our last session included:

Encouraging experimentation: Empowering individual contributors to tinker with tools like GenAI.

The rise of the PM skillset: How AI is shifting data scientists toward more product-focused roles.

Leadership fundamentals in AI: Why clear communication and setting realistic goals remain crucial as AI grows more complex.

This is a rare chance to learn from Min’s deep experience building scalable AI systems.

BUT WAIT… THERE’S MORE! 🚀

I have a bunch of other free online events coming up that I’m excited to share with you 🤗

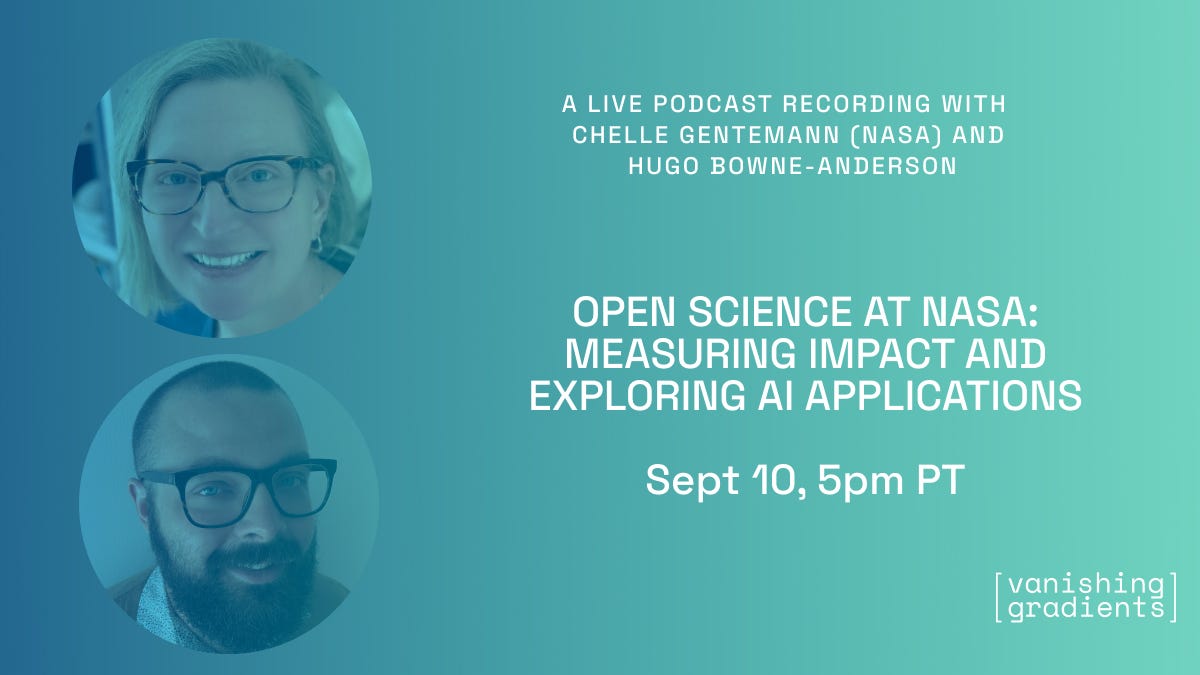

OPEN SCIENCE AT NASA: MEASURING IMPACT AND EXPLORING AI APPLICATIONS

This week, I’m livestreaming a Vanishing Gradients podcast recording with Chelle Gentemann, Open Science Program Scientist for NASA’s Office of the Chief Science Data Officer! We'll discuss:

🐭 What AI can tell us about RATS IN SPACE

🔍 Measuring Open Science Impact

🤖 AI in NASA Science

🌍 Collaborative Scientific Discovery

🚀 Challenges of Open Science in Government

🔭 The Future of Space Research

You can register for free here.

AI MEETS SEARCH WITH PACO NATHAN

This week, I’m also excited to host a live event as part of O’Reilly’s What’s New in AI series! I’ll be joined by Paco Nathan, a pioneer in machine learning and AI with decades of experience. We’ll dive into the intersection of AI and Search, discussing the rapidly changing landscape of information retrieval.

Why is this worth tuning in for? Paco has been at the forefront of recommender systems, chatbots, and search technology since the ’90s and continues to push the boundaries. We’ll explore his latest work with GraphRAG and multimodal embeddings, along with projects that use AI to track illegal fishing fleets and support counterterrorism efforts.

You can register here. Note: you need to be signed up to O’Reilly’s online platform to register, but there’s a 10-day free trial.

PROMPT ENGINEERING, SECURITY IN GENERATIVE AI, AND THE FUTURE OF AI RESEARCH

In a few weeks, I’ll be hosting a panel for Vanishing Gradients with Sander Schulhoff (University of Maryland, Learn Prompting), Denis Peskoff (Princeton University), and Philip Resnik (University of Maryland), discussing their recent work on The Prompt Report, a groundbreaking 76-page survey analyzing over 1,500 prompting papers. We’ll cover their novel taxonomy of prompting techniques, security concerns in generative AI, and where the field is heading next.

What to expect:

📜 The Evolution of Prompt Engineering: Where it fits alongside fine-tuning and RAG in shaping generative AI outputs.

🧠 A Taxonomy of Prompting Techniques: Exploring the six-part categorization, including cutting-edge approaches like chain-of-thought and self-criticism prompting.

🚨 Security in Generative AI: Discussing vulnerabilities such as prompt injections and how to secure AI models against potential exploits.

🌟 Future of AI: Speculations on how prompt engineering could influence areas like robotics, AR, and VR, pushing AI into exciting new frontiers.

👉 Join the panel discussion here.

I’ll be announcing more livestreams, events, and podcasts soon, so subscribe to the Vanishing Gradients lu.ma calendar to stay up to date. Also subscribe to our YouTube channel, where we livestream, if that’s your thing!

That’s it for now. Please let me know what you’d like to hear more of, what you’d like to hear less of, and any other ways I can make this newsletter more relevant for you.

Hugo