Is Data Science Dead in the Age of AI?

🎓 Build Robust AI Systems—Scholarship Applications Now Open!

Welcome back to Vanishing Gradients! This newsletter is where I explore developments in data science, ML, and AI while sharing what I've been working on. Whether it's practical tools, technical deep dives, or discussions about the space, my goal is to give you actionable and thoughtful content.

Here's what's coming up and what I've been working on recently:

A conversation with Hilary Mason on why data science isn't dead but evolving in the age of AI;

A free Maven lightning lesson on testing LLM applications, where Stefan Krawczyk and I will show you how to escape "demo hell";

Scholarship applications now open for our comprehensive GenAI course ($800 value);

A live session with Charles Frye exploring GPU fundamentals for AI developers.

📖 Reading time: 5 minutes

Let's dive into the details.

Is Data Science Dead in the Age of AI?

In the latest episode of High Signal, Hilary Mason emphatically answers: “No!” But while the field isn’t dying, it’s certainly evolving. Automation, generative AI, and a maturing community are redefining what data science means today.

💡 Fun fact: Hilary was the guest on the first podcast I ever released nearly a decade ago. It’s fascinating to reflect on how much has changed—and how much hasn’t.

Key Themes We Covered:

✨ From Hype to Maturity

Automation is transforming workflows and reducing the need for some entry-level roles. We discuss what this shift means for the future of the field.

📜 Why Generative AI Needs Context, Not Prompts

Hilary shared a thought-provoking analogy: “Prompting feels like spellcasting, not engineering.” Instead, she advocates for designing systems with rich context—leveraging structured data and multimodal inputs to unlock generative AI’s full potential.

⏳ A Decade of Interfaces, Not Algorithms

Even if machine learning innovation paused today, we’d still have years of work ahead to create the workflows, tools, and interfaces required to maximize its power.

👉 Listen to the full episode here (or on your app of choice) and let me know: What do you see as the future of data science in the age of AI?

Why Testing LLM-Powered Apps Matters

LLMs are powerful, but they’re also unpredictable. Variability in outputs can wreak havoc on real-world applications if left unchecked.

For example, when extracting structured data from Stefan Krawczyk’s LinkedIn page, the Current Role field changed across repeated runs on the same input. This kind of “flip-floppy” behavior highlights why systematic testing and evaluation are critical for building robust LLM-powered apps.

Join Our Free Maven Lightning Lesson

✅ Writing pytest Cases for LLM Outputs

Discover how to create test cases that evaluate variability and catch failure modes early.

✅ Identifying and Addressing Failure Modes

We’ll explore common pitfalls in LLM behavior and strategies for mitigating them.

✅ Systematic Evaluation for Reliability

Learn how to measure and improve performance iteratively for production-ready systems.

📅 When: Monday, Dec 16, 4:00 PM PST

🌐 Where: Virtual (Zoom)

💡 Why It Matters: Build confidence in your LLM-powered apps while avoiding “demo hell.”

LLMs may be unpredictable, but with the right tools and techniques, you can transform this chaos into engineering discipline. 🛠️

🎓 Build Robust AI Systems—Scholarship Applications Now Open!

Are you ready to take your generative AI skills to the next level? We’re offering scholarships for our upcoming course, Building LLM Applications for Data Scientists and Software Engineers, hosted on Maven (starts Jan 6, 2025!).

🌟 What You’ll Gain

This scholarship provides free access (valued at $800) to a four-week, live cohort-based course where you’ll:

Design and deploy production-ready AI systems that scale.

Master prompt engineering and handle structured outputs with confidence.

Debug, monitor, and optimize LLM applications for reliability.

Build a portfolio-worthy GenAI/LLM app to query PDFs.

This course is more than just learning—it’s about building expertise to solve real-world problems at scale.

👩‍💻 Who Should Apply

This course is designed for:

Data scientists and ML engineers eager to deploy reliable LLM systems.

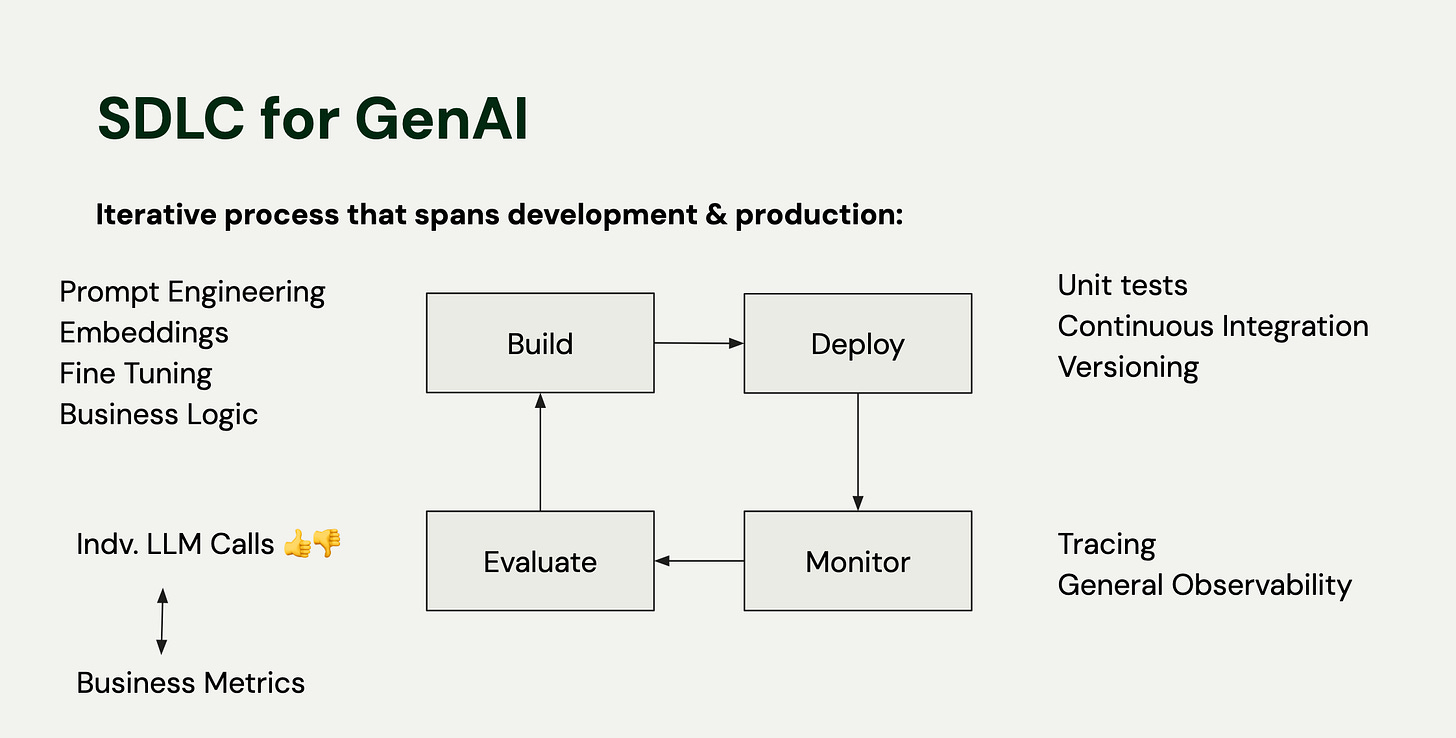

Software engineers ready to master the generative AI development lifecycle.

📚 About the Course

Led by Stefan Krawczyk (ex-Stitch Fix) and me, this course draws on our 20+ years of experience building scalable data, ML, and AI systems at companies like Stitch Fix, LinkedIn, and Outerbounds.

Expect live workshops, hands-on projects, and practical lessons that translate directly into results.

⏳ Application Deadline: Friday, Dec 20

Don’t miss this opportunity to level up your skills and build systems that work.

Check out the course details: Building LLM Applications for Data Scientists and Software Engineers.

🎛️ What Every Data Scientist and LLM Developer Needs to Know About GPUs

GPUs are at the heart of AI development, but their complexity can be daunting for developers. That’s why Charles Frye and his team at Modal just launched the GPU Glossary—a resource that demystifies GPU hardware and software for developers.

To celebrate, we’re hosting a live podcast recording to explore the critical role of GPUs in AI!

📅 Event Details

When: Thursday, December 19th, 1:30 PM - 3:00 PM PST

Where: Virtual (YouTube)

What You’ll Learn:

💡 Why GPUs Are Essential for LLMs

Understand the hardware features that make GPUs the backbone of AI workloads.

📂 GPU Internals, Simplified

Key concepts like CUDA, Streaming Multiprocessors (SMs), and memory hierarchies explained clearly.

🛠️ Practical Advice for Developers

When and how to use GPUs effectively in your LLM workflows.

🚀 Scaling AI Workloads

How to build pipelines that maximize performance on GPU hardware.

About Charles Frye

Charles is a Developer Advocate at Modal, a teacher in the Full Stack Deep Learning community, and a former Weights & Biases team member. With a PhD in Computational Neuroscience from UC Berkeley, he brings deep expertise in neural networks, Bayesian methods, and programming for AI systems.

If you’re a data scientist, LLM developer, or AI engineer, this event is for you. Don’t miss the chance to learn how to leverage GPUs to power your projects!

Let’s dive into GPUs, AI, and more—I hope to see you there!

I’ll be announcing more livestreams, events, and podcasts soon, so subscribe to the Vanishing Gradients lu.ma calendar to stay up to date. Also subscribe to our YouTube channel, where we livestream, if that’s your thing!

That’s it for now. Please let me know what you’d like to hear more of, what you’d like to hear less of, and any other ways I can make this newsletter more relevant for you.

Hugo