Master LLM Application Development: Course & Free Resources

Free 30-min AI/ML Consultation with me – or grab the free resources below!

Hey everyone,

If you’re a software engineer or data scientist, chances are you’ve built (or seen) a generative AI proof of concept (POC). It’s exciting at first—until it breaks down under real-world conditions. Most AI demos look impressive but rarely make it to production without the right development cycle.

That’s why Stefan Krawczyk and I are running Building LLM Applications for Data Scientists and Software Engineers—a course designed to help you move beyond POC purgatory and build production-ready AI systems.

👉 Sign up to the course in the next 48 hours to:

Get 25% off the full course (use code VG25) and

Claim a free 30-minute consultation with me—get personalized feedback on your GenAI project, whether refining prompts or scaling production pipelines.

The course starts in 2 days, and we’ve already got a thriving Discord community with engineers from Meta, Deloitte, Netflix, Salesforce, Atlassian, and the U.S. Air Force.

One participant is applying these skills to enhance poverty targeting models for developing countries, while others are working on diffusion models, GenAI products, and high-impact AI research.

Once the course kicks off, this becomes a closed space of peers actively building the next wave of LLM-powered applications.

👉 This email is about the course, but I know not everyone can commit right now. That’s why I’ve included free resources at the end of this email to help you get started and keep learning. Let me know if you find them useful!

What You’ll Learn (Course Overview):

From POC to Production – Break out of demo purgatory and build scalable, reliable LLM apps.

Prompt Engineering & Structured Outputs – Master techniques to get consistent results and handle complex tasks like function calling.

Monitoring, Debugging, and Observability – Develop workflows to catch and resolve issues in production.

Build & Iterate Faster – Learn how to create feedback loops, refine prompts, and continuously improve your models.

Real Projects, Real Apps – Walk away with production-grade LLM apps, including a PDF querying app and agent workflows.

These aren’t just theoretical exercises—you’ll get hands-on projects, direct feedback, and live interaction throughout the course.

Guest Lectures in the course:

Learn directly from engineers and leaders who have scaled LLMs at Airbnb, Google, and GitHub.

Sander Schulhoff (CEO, LearnPrompting.org) – Prompt Engineering in the LLM SDLC

Charles Frye (Developer Advocate, Modal) – Hardware for LLM Developers

Ravin Kumar (Google Labs) – End-to-End LLM Product Development

Aaaaaand we have two more new guest speakers!

Swyx (Shawn Wang): Engineering AI Agents in 2025 💫

I’m excited to announce that Swyx (Shawn Wang) will be speaking on “Engineering AI Agents in 2025.” Swyx is an expert in building AI agents, and his insights will be invaluable to anyone looking to build autonomous AI systems.

👉 If you’re unfamiliar with Swyx, here’s a quick rundown:

Co-host of the Latent Space podcast

Organizer of ai.engineer conferences (with AIE NYC 2025 coming up)

Builder of smol.ai, a platform with 30k daily users, 99% automated by AI agents

Swyx’s session will dive deep into the future of AI agents—and how to build them effectively for 2025 and beyond.

Hamel Husain: Data Literacy for Debugging and Evaluating LLMs 💡

Hamel Husain (AI Engineer at Parlance Labs, ex-Airbnb, ex-GitHub) will be leading a session on data literacy, a critical skill for debugging LLMs. His session will teach you how to look at your data with fresh eyes, enabling you to make smarter decisions when debugging and improving LLM applications.

Hamel explains that to troubleshoot effectively, you need a "physical connection" to your data—getting your hands dirty and really exploring what’s happening. This approach, central to debugging, helps you prioritize efforts and break through plateaus when LLMs aren’t performing as expected.

If you’re interested, here’s a clip from a chat I had with Hamel yesterday about these issues:

Bonus Offers (For Full Course Participants):

$1,000 in Modal credits – Power your AI apps with cloud compute and deploy models faster without worrying about infrastructure costs.

3 months of Learn Prompting Plus (valued at $117) – Master advanced prompting techniques and image generation.

👉 Sign up today, use code VG25 for 25% off, and claim a free 30-minute consultation with me 🤗

Free Resources:

If now’s not the right time to join the course, that’s okay. Here’s a selection of free resources to help you build reliable LLM applications:

📚 Building LLM Apps: Essential Resources for Data Scientists and Software Engineers – A curated list covering Python, deep learning, MLOps, evaluation, and prompt engineering.

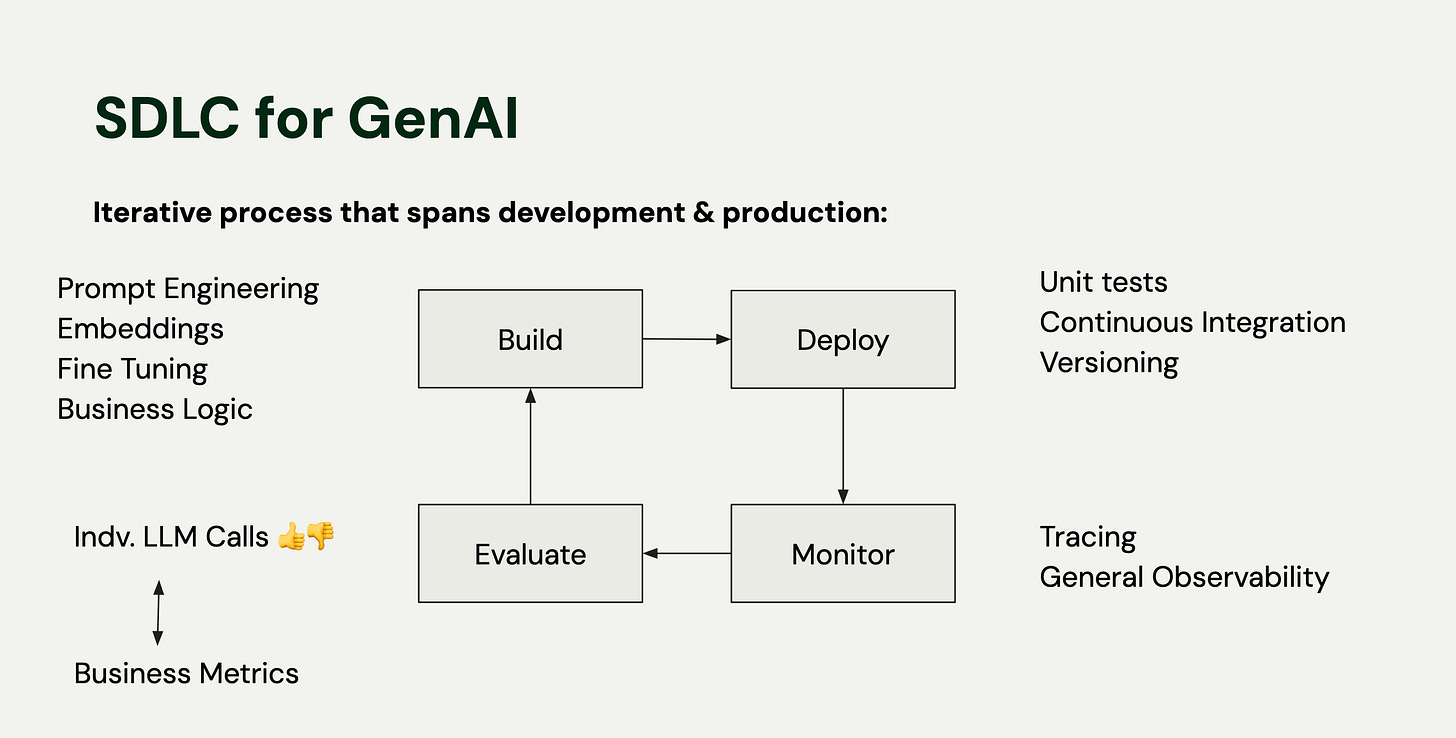

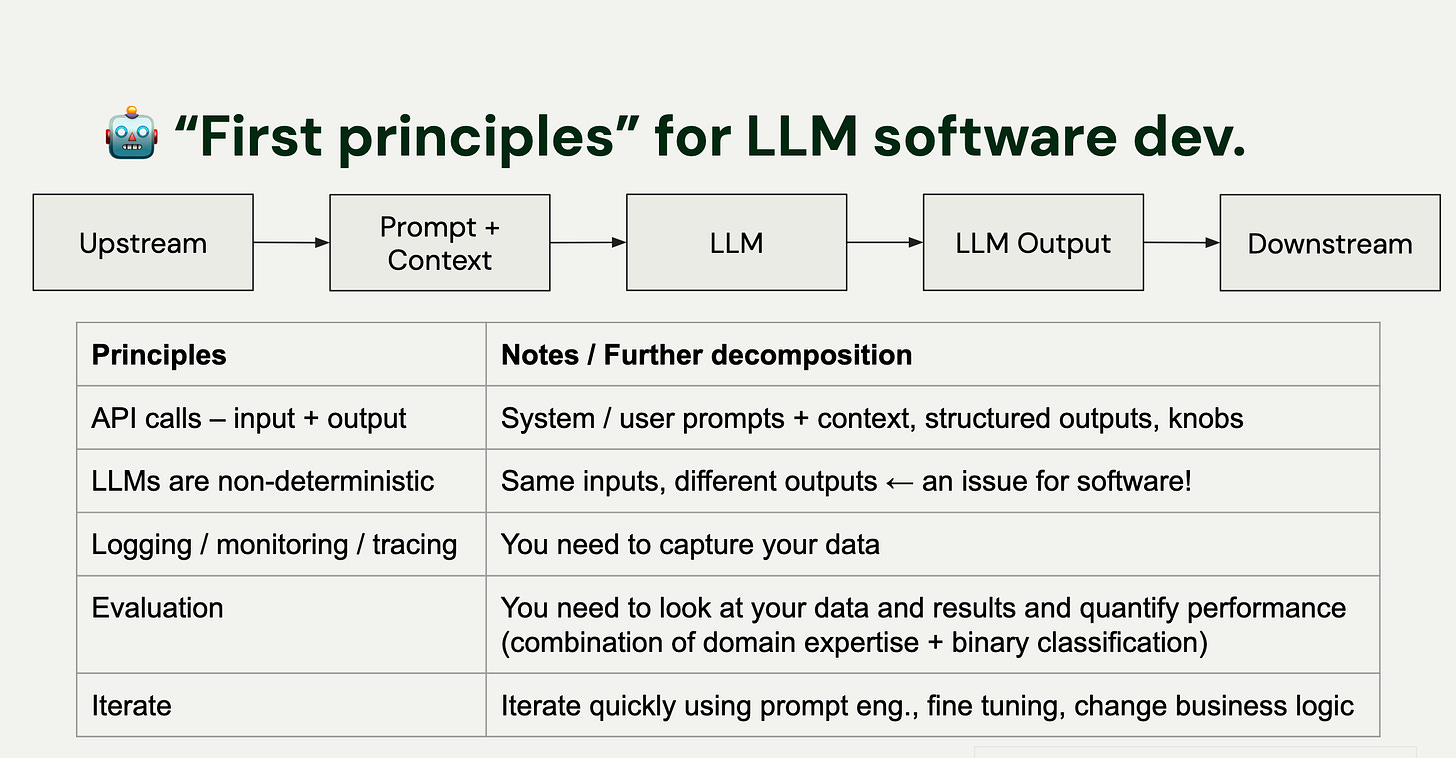

🔧 Cheat Sheet: Building Reliable LLM Apps – A concise breakdown of the SDLC for LLM apps, covering design, iteration, and observability.

🗂️ Vector Tools: From np.array to Vector DBs – Wondering when to switch to a vector DB? This resource outlines the tipping points from simple arrays to production-grade vector indices.

⚡ Free Lightning Lessons (Watch Now):

Building with GenAI from First Principles – Learn to approach GenAI app development systematically.

Mastering LLM Application Testing – Build testing workflows to improve reliability and performance.

👉 These resources are a great starting point, but the full course provides the hands-on projects, live feedback, and community interaction needed to take things further.

Over 1,000 engineers have already joined our lightning lessons leading up to this course. Here’s what some of them had to say:

“The lightning lessons alone were worth it—excited to go deeper in the full course.”

“This course feels like the real deal. It’s solving problems no other resources are addressing.”

If scaling LLMs feels chaotic, this is your chance to streamline your workflow and learn from industry leaders.

Seats are limited, and the course begins in 2 days. Don’t miss your opportunity to join a cohort of like-minded professionals—lock in your spot today.

Best,

Hugo