The Post-Coding Era: What Happens When AI Writes the System?

What it takes to train SOTA model capabilities as a product lab

Nicholas Moy, former Head of Research at Windsurf (formerly Codeium) and now at Google DeepMind, recently joined the High Signal podcast to talk about the rapid evolution of AI in software engineering and what it means for builders to move from “co-driving” to “agentic” development in real time.

We wrote this post for those who don’t have 60 minutes to listen to the entire episode. It captures some of our favourite parts of the conversation, including:

How to design systems that scale when technology becomes obsolete every six months;

Why 2025 was not the “year of the agent” but 2026 and beyond is the “age of the agent”;

What it takes to train SOTA model capabilities as a product lab;

What software development looks like when agents are better at coding than we are.

Find the full episode on Spotify, Apple Podcasts, and YouTube, or the full show notes here.

You can also interact directly with the transcript here in NotebookLM. If you do, let us know what you find in the comments!

Ship Today, Disrupt Yourself Tomorrow

Windsurf’s story is worth studying. Nick recounted the team’s early days, when they prototyped an agentic coding assistant long before “agentic” was a common term (Nick even built the first multi-step coding agent!). They shipped what was useful today and were ready to move when the models caught up.

These early versions, built on models like GPT-3.5, consistently failed due to compounding errors. The team made a strategic decision to ship a product that was useful today, a high-quality autocomplete feature, while keeping their long-term agentic vision on the back burner.
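Why compounding errors are so punishing is easy to see with a little arithmetic. The sketch below assumes each step of an agent’s trajectory succeeds independently with the same probability, which is a simplification, and the probabilities are illustrative rather than measurements of GPT-3.5 or any other model. Under that assumption, an agent that gets each step right 90% of the time finishes a 20-step task cleanly only about 12% of the time (0.9^20 ≈ 0.12).

```python
def end_to_end_success(per_step_success: float, num_steps: int) -> float:
    """Probability that every step succeeds, assuming independent, identical steps."""
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be in [0, 1]")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return per_step_success ** num_steps


if __name__ == "__main__":
    # Illustrative per-step reliabilities, not benchmark numbers for any model.
    for p in (0.90, 0.95, 0.99):
        row = ", ".join(f"{n} steps: {end_to_end_success(p, n):.2f}" for n in (5, 20, 50))
        print(f"p={p:.2f} -> {row}")
```

Small gains in per-step reliability move long-task success a lot, which is one way to read how a stronger model can flip an agent from unusable to useful.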

The turning point came with the release of a more capable model. This illustrates a critical lesson for builders in the AI space: a product that is non-viable today might become a category leader tomorrow, simply because a new model checkpoint was released.

This reality collapses traditional product cycles, and companies have to be prepared to cannibalize their own successful products.

The key, Nick argues, is to define your company’s identity not by its current product (e.g., “the tab autocomplete company”) but by its ultimate mission (“the AI-powered software generation company”).

How To Build What Lasts When the Ground Shifts Every Six Months

One of the biggest engineering challenges today is writing software that remains valuable as AI models become exponentially more capable every few months. How do you avoid building complex systems that a new model renders obsolete overnight?

Nick’s approach is to focus on invariants: the fundamental, unchanging aspects of a problem. He suggests identifying the core information inputs and output forms that will always be necessary to complete a task, regardless of the AI’s intelligence.

Information Invariants: What data is fundamentally required to solve this problem? This will remain true even if the model gets 100x smarter.

Output Invariants: What form must the final work product take to be useful in its target industry or system?
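As a concrete illustration, here is one way the two kinds of invariant could be written down for a coding agent. Everything in it is hypothetical: `TaskInputs`, `WorkProduct`, and `check_output_invariants` are names chosen for this sketch, and the fields (an instruction, a repository snapshot, and a test command in; whole-file edits plus a reviewer summary out) are assumptions about what a coding task needs, not Windsurf’s actual schema. The point is that none of these types mention a model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskInputs:
    """Information invariants (hypothetical): what any solver, human or model, must see."""
    instruction: str
    files: dict[str, str]  # repository snapshot: path -> contents
    test_command: str


@dataclass(frozen=True)
class WorkProduct:
    """Output invariant (hypothetical): edits a reviewer can apply, plus an explanation."""
    edits: dict[str, str]  # path -> full new contents
    summary: str


def check_output_invariants(inputs: TaskInputs, product: WorkProduct) -> list[str]:
    """Return a list of violations; an empty list means the work product is usable."""
    problems: list[str] = []
    if not product.edits:
        problems.append("no edits produced")
    if not product.summary.strip():
        problems.append("missing summary for the reviewer")
    for path, contents in product.edits.items():
        if path.startswith("/") or ".." in path.split("/"):
            problems.append(f"path escapes the repository: {path}")
        elif inputs.files.get(path) == contents:
            problems.append(f"no-op edit: {path}")
    return problems
```

Whether the work comes from today’s model or one 100x smarter, these checks stay the same; only the code that produces a `WorkProduct` changes.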

By architecting systems around these stable pillars, you can treat the model-specific logic and “agent harness” as a temporary layer that you should be prepared to throw away. This discipline allowed Windsurf’s core agentic framework to remain largely unchanged for 18 months, even as they swapped out underlying models and the system’s capabilities grew dramatically. The risk of not simplifying aggressively is that your existing harness can handicap a new, more capable model, preventing you from realizing its full potential.
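A minimal sketch of that separation, with all names hypothetical: `run_task` is the stable core that enforces the output invariant, while each `*Adapter` class holds the model-specific scaffolding and can be deleted when a better model arrives. The model calls are plain Python callables standing in for whatever API you use; no real provider SDK is assumed.

```python
from typing import Callable, Protocol

Files = dict[str, str]  # path -> contents


class ModelAdapter(Protocol):
    """The disposable layer: all model-specific logic lives behind this interface."""
    def propose(self, instruction: str, files: Files) -> Files: ...


def run_task(adapter: ModelAdapter, instruction: str, files: Files) -> Files:
    """Stable core: same inputs in, same output form out, whichever model is plugged in."""
    edits = adapter.propose(instruction, files)
    unknown = sorted(p for p in edits if p not in files)
    if unknown:
        # Output invariant enforced once, here, rather than inside each adapter.
        raise ValueError(f"edits outside the snapshot: {unknown}")
    return edits


class ScaffoldedAdapter:
    """Hypothetical harness for a weaker model: heavy constraints, one file at a time."""
    def __init__(self, complete: Callable[[str], str]):
        self.complete = complete  # stand-in for a text-completion call

    def propose(self, instruction: str, files: Files) -> Files:
        path = sorted(files)[0]  # crude constraint: only ever edit the first file
        prompt = f"Edit only {path}.\nTask: {instruction}\n---\n{files[path]}"
        return {path: self.complete(prompt)}


class DirectAdapter:
    """Hypothetical harness for a stronger model: hand over the whole task, unfettered."""
    def __init__(self, solve: Callable[[str, Files], Files]):
        self.solve = solve  # stand-in for an agentic call that returns edits

    def propose(self, instruction: str, files: Files) -> Files:
        return self.solve(instruction, files)
```

Swapping `ScaffoldedAdapter` for `DirectAdapter` changes nothing in `run_task` or its callers. The scaffolded version’s one-file restriction is exactly the kind of constraint that would handicap a more capable model if it were left in place.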

In Nick’s words, “we should just unleash [the model], unfetter it, and let it flex its wings!”

The Decade of the AI Agent: From Co-Driving to Autonomous Agents

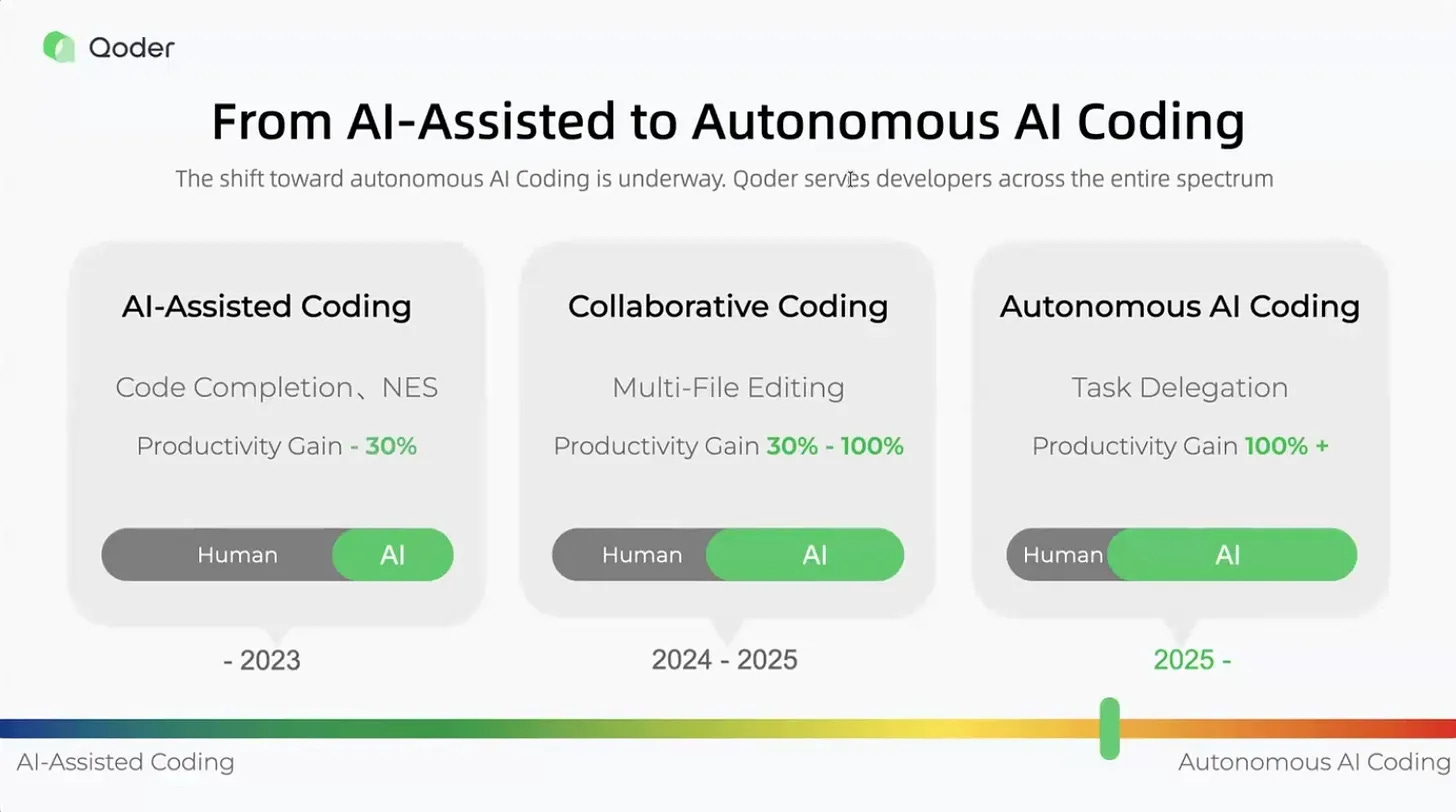

The paradigm for human-AI collaboration in software is undergoing a fundamental shift. Nick frames this as the move from “co-driving” to a truly “agentic” workflow.

Co-Driving: The human is in the driver’s seat, with the AI acting as a real-time assistant (like autocomplete or a chat window). The developer constantly supervises the AI’s output, making course corrections every few seconds or minutes.

Agentic: The human assigns a complete task or project to an AI agent and walks away. The developer’s role shifts to reviewing the finished work, much like reviewing a pull request from a human colleague.

The primary barrier to the agentic paradigm has been the high cost of failure. If an unsupervised agent goes down the wrong path, the developer pays a “double penalty”: the time to understand what the agent tried to do, and the time to correct its mistakes. However, as models improve, they are crossing a threshold of reliability where this trade-off becomes favorable.
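The trade-off can be made concrete with a back-of-the-envelope model. The sketch assumes, purely for illustration, that co-driving a task costs a fixed number of human minutes, while delegating always costs a review and, when the agent fails (probability 1 − p), adds the double penalty of understanding plus correcting. Delegation wins once review + (1 − p)(understand + correct) ≤ co-driving time, which rearranges to p ≥ 1 − (co-driving − review) / (understand + correct). The function names and minute figures are hypothetical.

```python
def expected_agentic_minutes(p_success: float, review: float, understand: float, correct: float) -> float:
    """Expected human minutes per delegated task.

    Every run is reviewed; a failed run (probability 1 - p_success) also incurs the
    'double penalty' of understanding what the agent did and then correcting it.
    """
    return review + (1.0 - p_success) * (understand + correct)


def break_even_success_rate(codriving: float, review: float, understand: float, correct: float) -> float:
    """Agent success rate at which delegating costs the same time as co-driving.

    Values above 1 mean delegation never pays off on time alone;
    values below 0 mean it always does.
    """
    penalty = understand + correct
    if penalty <= 0:
        raise ValueError("understand + correct must be positive")
    return 1.0 - (codriving - review) / penalty


if __name__ == "__main__":
    # Hypothetical minutes: 30 to co-drive, 10 to review, 15 to understand, 25 to correct.
    print(break_even_success_rate(30, 10, 15, 25))  # 0.5
    print(f"{expected_agentic_minutes(0.8, 10, 15, 25):.1f}")
```

With those made-up numbers, an agent that succeeds 80% of the time costs 18 expected minutes per task versus 30 to co-drive. As models get more reliable, p climbs past the break-even point, which is the threshold described above.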

This shift has serious implications for the developer interface. The primary locus of interaction is moving from the IDE to review-centric surfaces. Nick points to Google’s Antigravity project, which intentionally abstracts the editor away in its “agent manager mode,” presenting summaries, test results, and other artifacts for review instead.

Put another way, PRs are replacing the IDE. As agents move toward true autonomy, the editor becomes a secondary interface. The primary surface for supervising AI is shifting toward “agent manager modes” that resemble pull requests, where users review high-level artifacts, screen recordings of UI tests, and unit test logs rather than watching a cursor move in real time.
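To make “review high-level artifacts” concrete, here is a hypothetical shape for what an agent might hand back instead of a live editing session. `ReviewPacket`, `CheckResult`, and the rendered layout are illustrative assumptions, not the format of any particular product. The design choice worth noticing is that the reviewer sees outcomes (summary, checks, recordings, diff) rather than process.

```python
from dataclasses import dataclass, field


@dataclass
class CheckResult:
    name: str
    passed: bool


@dataclass
class ReviewPacket:
    """Hypothetical PR-style bundle an agent returns for asynchronous review."""
    title: str
    summary: str
    diff: str
    checks: list[CheckResult] = field(default_factory=list)
    recordings: list[str] = field(default_factory=list)  # e.g. paths to UI test recordings

    def ready_for_review(self) -> bool:
        """Only surface work that changes something and passes every check."""
        return bool(self.diff.strip()) and all(c.passed for c in self.checks)

    def render(self) -> str:
        """Plain-text view: outcome first, diff last."""
        passed = sum(c.passed for c in self.checks)
        lines = [self.title, "", self.summary, "", f"Checks: {passed}/{len(self.checks)} passed"]
        lines += [f"  FAILED: {c.name}" for c in self.checks if not c.passed]
        lines += [f"Recording: {r}" for r in self.recordings]
        lines += ["", self.diff.rstrip()]
        return "\n".join(lines)
```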

Redefining Product Defensibility: Data, Brand, and Ambition

In an era where state-of-the-art models are available via API, traditional technical moats are becoming fragile. Nick argues that sustainable competitive advantage now comes from other sources:

Real-World Usage Data: While synthetic data is becoming incredibly powerful, it struggles to replicate the “fuzzy” nuances of human interaction. Capturing subtle user preferences, like when not to show a suggestion, creates a valuable data flywheel that is difficult to synthesize. This data is key for personalization and fine-tuning models on behaviors that matter most to users.

Brand and Reputation: Having a product in the market builds brand recognition, customer trust, and a strong recruiting pipeline for top talent. In a competitive hiring market, a reputation for innovation and a clear mission can be a decisive advantage.

Frontier Intuition: Being first to market with new capabilities gives you an informational edge. You develop a deeper intuition for what users need and where the frontier of possibility is heading, allowing you to anticipate the next wave of features before competitors.

In other words, personalization is the application moat. While foundation model labs dominate general intelligence, application-specific startups are in a “prime position” to win on per-user model personalization. By owning the direct relationship with the user, these companies can build feedback loops that tailor model behavior to individual styles in a way that centralized labs cannot replicate.
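One way to picture the “when not to show a suggestion” flywheel is a per-user gate learned from accept and dismiss events. This is a deliberately simple stand-in, not Windsurf’s method: `SuggestionGate` is hypothetical, each (user, context) pair gets a Beta-Bernoulli estimate of acceptance, with posterior mean (accepts + a) / (accepts + dismisses + a + d) under a Beta(a, d) prior, and suggestions are suppressed when that estimate drops below a threshold. A production system might instead feed such signals into fine-tuning or a learned ranker.

```python
class SuggestionGate:
    """Hypothetical per-user gate: show a suggestion only if this user tends to accept it."""

    def __init__(self, prior_accepts: float = 1.0, prior_dismisses: float = 1.0, threshold: float = 0.3):
        self.prior_accepts = prior_accepts
        self.prior_dismisses = prior_dismisses
        self.threshold = threshold
        self.counts: dict[tuple[str, str], list[int]] = {}  # (user, context) -> [accepts, dismisses]

    def record(self, user: str, context: str, accepted: bool) -> None:
        """Log one real interaction: the raw material that is hard to synthesize."""
        counts = self.counts.setdefault((user, context), [0, 0])
        counts[0 if accepted else 1] += 1

    def acceptance_estimate(self, user: str, context: str) -> float:
        """Posterior mean acceptance rate under a Beta(prior_accepts, prior_dismisses) prior."""
        accepts, dismisses = self.counts.get((user, context), (0, 0))
        return (accepts + self.prior_accepts) / (
            accepts + dismisses + self.prior_accepts + self.prior_dismisses
        )

    def should_show(self, user: str, context: str) -> bool:
        return self.acceptance_estimate(user, context) >= self.threshold


if __name__ == "__main__":
    gate = SuggestionGate()
    for _ in range(5):
        gate.record("alice", "docstring", accepted=False)  # alice keeps dismissing docstrings
    print(gate.should_show("alice", "docstring"))  # False: estimate is 1/7
    print(gate.should_show("bob", "docstring"))    # True: no data yet, prior mean 0.5
```

The same event stream that trains the gate for one user says nothing about another, which is the sense in which the data is tied to the product relationship.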

Economically, the “build vs. buy” decision for models is also evolving. While building your own model can offer cost advantages at scale, it’s nearly impossible for a custom model to keep pace with the raw capability of the next frontier model from a major lab. Successful products will likely use a portfolio of models, routing each task to the most appropriate one based on cost, speed, and required intelligence.
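A portfolio router can start out very simple. The sketch below is hypothetical throughout: the model names, capability scores, prices, and latencies are invented for illustration, and the rule (cheapest model that clears the task’s capability bar within the latency budget, ties broken by latency) is one reasonable policy among many, not a description of how any lab or product routes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    capability: int             # hypothetical score from your own evals (higher is smarter)
    cost_per_1k_tokens: float   # hypothetical price in USD
    p50_latency_ms: int         # hypothetical median latency


def route(models: list[ModelOption], required_capability: int, latency_budget_ms: int) -> ModelOption:
    """Pick the cheapest model that is smart enough and fast enough for this task."""
    eligible = [
        m for m in models
        if m.capability >= required_capability and m.p50_latency_ms <= latency_budget_ms
    ]
    if not eligible:
        raise LookupError("no model meets both the capability bar and the latency budget")
    return min(eligible, key=lambda m: (m.cost_per_1k_tokens, m.p50_latency_ms))


# Invented portfolio: a small in-house model plus two API models.
PORTFOLIO = [
    ModelOption("inhouse-small", capability=4, cost_per_1k_tokens=0.0002, p50_latency_ms=80),
    ModelOption("api-mid", capability=7, cost_per_1k_tokens=0.002, p50_latency_ms=400),
    ModelOption("api-frontier", capability=10, cost_per_1k_tokens=0.02, p50_latency_ms=2500),
]

if __name__ == "__main__":
    print(route(PORTFOLIO, required_capability=3, latency_budget_ms=150).name)     # inhouse-small
    print(route(PORTFOLIO, required_capability=6, latency_budget_ms=1000).name)    # api-mid
    print(route(PORTFOLIO, required_capability=9, latency_budget_ms=10_000).name)  # api-frontier
```

In this toy setup, latency-sensitive, low-difficulty requests (think autocomplete) land on the cheap in-house model, while hard agentic tasks fall through to the frontier API. The in-house model only has to win on cost for its slice, not match frontier capability.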

Key Takeaways

Disrupt Yourself: The pace of model improvement means you must constantly prototype the next version of your product. An idea that is impossible today may be feasible in three months.

Focus on Invariants: Build resilient systems by architecting around the stable, fundamental requirements of a task, not the transient capabilities of a specific model.

The Workflow is Shifting: Developer interaction is moving from real-time “co-driving” in the IDE to asynchronous review of agent-generated pull requests.

Moats are No Longer Purely Technical: Defensibility now lies in proprietary user data, brand reputation, and the intuition that comes from building at the frontier.

Dream Bigger: The increasing capability of AI allows teams to “swag” more ambitious projects. The limiting factor is often the scope of our own imagination, not the technology.