Why Most AI Agents Are Overkill—And When They’re Not

What Eric Colson (ex-Stitch Fix, ex-Netflix) Taught Me About Data Science Failure

Welcome back to Vanishing Gradients! This edition covers some of the biggest challenges in AI today—from agentic system failures to debugging AI applications more effectively.

Here’s what’s inside:

🚨 Why most AI agents fail—and when they actually work

📖 Why "prompt-and-pray" AI is breaking production systems

🛠️ AI coding assistants: customization, debugging, and real workflows

📊 Eric Colson (ex-Stitch Fix, ex-Netflix) on why most data science teams struggle to deliver impact

🔍 Hamel Husain (ex-Airbnb, ex-GitHub) on systematically debugging AI applications

📢 AI events, resources, and major updates from the past week

📖 Reading time: 8-10 minutes

Let’s dive in.

🚨 Why Do 99% of Agentic Systems Fail?

Stefan Krawczyk and I just wrapped up teaching Building LLM Applications to 80+ engineers and ML leaders from Meta, Netflix, Ford, the US Air Force, and more.

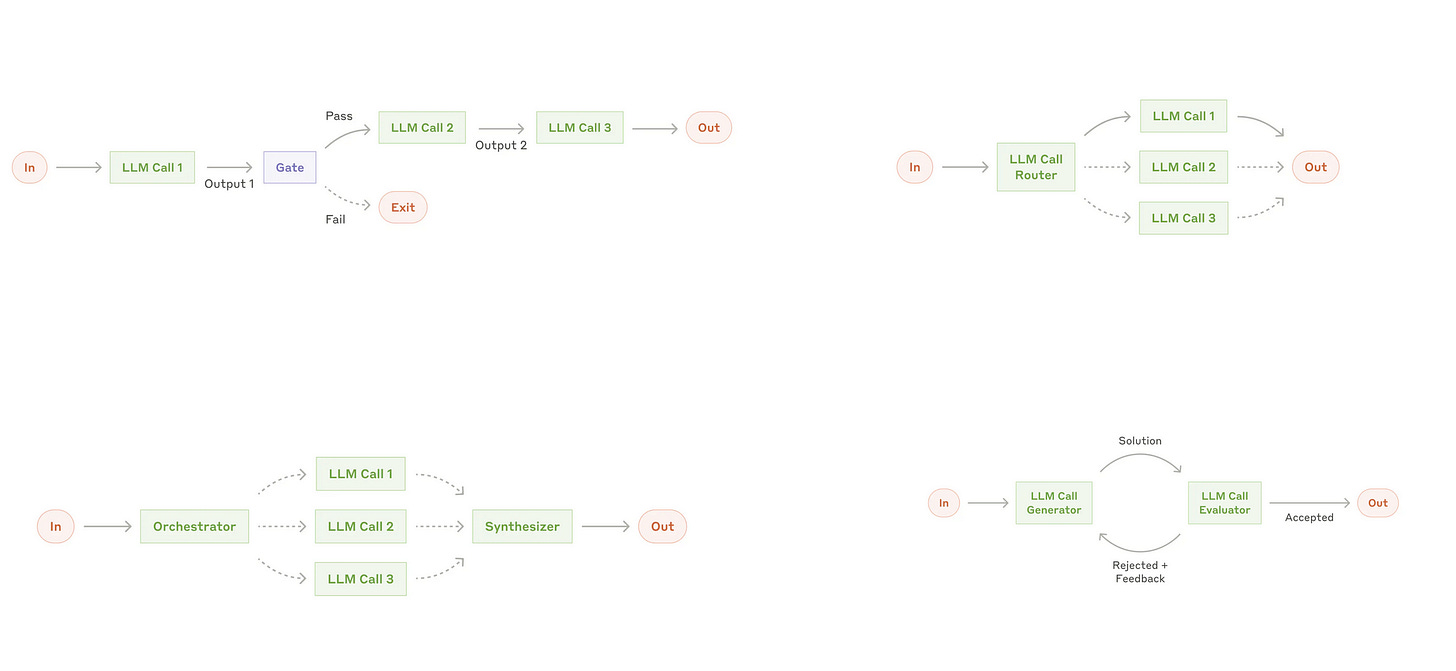

One of the biggest takeaways? Most people don’t need multi-agent systems, which often add:

⚠️ Complexity without clear benefits

🛠️ Debugging nightmares due to unpredictable behavior

🔄 Orchestration overhead that slows development

So, when do agents actually improve your system—and when don’t they? We’re running a free Lightning Lesson to break it down:

✅ When agents actually improve your system—and when they don’t

🛠️ How to structure agentic workflows with tool use & function calling

⚠️ Common pitfalls & debugging strategies

📅 Register here (can’t make it? Register anyway, and we’ll send the recording 🤗).

🤖 Why "Prompt-and-Pray" AI Doesn't Work

One of the biggest anti-patterns in AI implementations today is the "prompt-and-pray" approach—where business logic is embedded entirely in prompts, and teams hope that LLMs will figure things out at runtime. This is fragile, costly, and impossible to scale reliably.

In a new piece for O'Reilly, Alan Nichol and I break down:

✅ Why relying on LLMs for critical decisions leads to unreliable and inefficient systems

✅ How separating business logic from conversational ability creates more maintainable AI

✅ The structured automation approach that leading AI teams are using to build scalable, robust systems
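To make the separation concrete, here is a minimal sketch of the idea, not the approach from the essay itself. It assumes a hypothetical refund flow: `classify_intent` stands in for an LLM call (here faked with keyword matching so the example runs offline), while `refund_decision` holds the business rule as plain, testable code. Every name, the 30-day window, and the return labels are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy value, kept in code rather than buried in a prompt.
REFUND_WINDOW_DAYS = 30


@dataclass
class Order:
    order_id: str
    days_since_purchase: int


def classify_intent(message: str) -> str:
    """Stand-in for an LLM call that maps free text to one known intent label."""
    text = message.lower()
    if "refund" in text or "money back" in text:
        return "request_refund"
    return "unknown"


def refund_decision(order: Order) -> str:
    """Deterministic business rule: no model involved, so it can be unit tested."""
    if order.days_since_purchase <= REFUND_WINDOW_DAYS:
        return "approved"
    return "denied"


def handle(
    message: str,
    order: Order,
    classify: Callable[[str], str] = classify_intent,
) -> str:
    """The model only decides what the user wants; code decides what happens."""
    intent = classify(message)
    if intent == "request_refund":
        return f"refund_{refund_decision(order)}"
    return "handoff_to_human"


if __name__ == "__main__":
    print(handle("Can I get my money back?", Order("A1", 10)))
    print(handle("I want a refund", Order("A2", 45)))
    print(handle("Where is my parcel?", Order("A3", 3)))
```

Because `classify` is passed in as a parameter, a real model call could replace the keyword stub without touching the refund rule, and the rule can be tested without any model in the loop.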

👉 Read the full essay here: Beyond Prompt-and-Pray -- Building Reliable LLM-Powered Software in an Agentic World

Curious—have you seen "prompt-and-pray" in action? What approaches have worked better for you? Reply and let me know!

🛠 AI Coding Assistants: Who’s Really in Control?

AI coding assistants are changing how developers write, debug, and maintain code—but who’s in the driver’s seat?

In the latest episode of Vanishing Gradients, I sat down with Ty Dunn, CEO and co-founder of Continue, an open-source AI-powered coding assistant designed to give developers more flexibility and control over their workflows.

🚀 Watch the Live Demo

In this clip, Ty swaps between AI models (Claude, DeepSeek, etc.) in real time, runs models locally with Ollama, and modifies the AI’s reasoning process—all from within his coding environment.

What We Covered:

💡 Why open-source AI assistants might be the future

🧩 Modular AI workflows—how developers can swap models like Claude, DeepSeek, and Llama in real time

⚙️ Customization vs. sensible defaults—balancing ease of use with deeper configuration

🔍 Fine-tuning models on personal and team-level data for better AI-powered coding

🚀 The evolution of AI coding: from autocomplete to intelligent assistants

If you’ve ever felt locked into a black-box AI assistant or wondered what’s next for developer-first AI tools, this episode is for you.

👉 Check it out and let me know—how do you see AI shaping the future of coding?

Full Episode & Discussion

🎙️ Podcast Episode | 📺 Livestream Replay

📊 Why 90% of Data Science Projects Fail—And How to Fix It

Most companies invest in data science, but few actually turn it into business impact. Why? In the latest episode of High Signal, I spoke with Eric Colson—DS/ML advisor, former Chief Algorithms Officer at Stitch Fix and VP of Data Science at Netflix—about why companies struggle to make data science work at scale.

🚀 Watch the Clip

In this clip, Eric explains why most companies fail to leverage data science effectively—and what gets left on the table when ideas only flow from business teams.

What We Covered:

⚡ Why most data science projects fail to deliver real business value

🔄 How to structure teams so data science shapes business strategy—not just answers questions

📉 Why companies avoid experimentation—and why that’s a mistake

🤖 How trial-and-error in data science leads to better decisions and bigger wins

🎧 Listen to the Full Episode:

🎙️ Spotify: Listen here

🍏 Apple Podcasts: Listen here

📄 Show Notes & More: Read here

High Signal is produced by Delphina, with Duncan Gilchrist and Jeremy Hermann, and helps you navigate AI, data science, and machine learning while advancing your career.

👉 Check it out—what’s the biggest challenge you see in making data science work inside companies?

🔍 Trusting AI: Why Error Analysis Matters

“For any AI application, you need to look at your data in order to trust it.” – Hamel Husain

Most teams deploying LLM-powered software struggle with understanding errors, debugging failures, and building trustworthy AI systems. So, how do you actually do it? In a 90-minute livestream, Hamel Husain and I tackled error analysis and failure modes in AI applications—covering everything from debugging workflows to using meta-prompts and synthetic data for evaluation.

🎬 Watch this clip where Hamel explains why trusting AI starts with understanding your data.

What We Covered:

🔍 How to systematically analyze errors in LLM-powered software

📊 Using pivot tables, clustering, and faceting for debugging

🛠️ Why misleading dashboards lead to bad decisions

⚙️ Evaluating meta-prompts and synthetic data to improve reliability

🔄 How fine-tuning and data flywheels impact long-term performance
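For a feel of what pivot-table-style error analysis can look like, here is a small sketch using only the Python standard library. It is not the workflow from the livestream. The traces, the `feature` facet, and the `failure_mode` labels are all made-up assumptions standing in for labels you would assign while reading real logs.

```python
from collections import Counter, defaultdict

# Hypothetical hand-labeled traces; real ones would come from reviewing logs.
traces = [
    {"feature": "search", "failure_mode": "hallucinated_citation"},
    {"feature": "search", "failure_mode": "none"},
    {"feature": "summarize", "failure_mode": "truncated_output"},
    {"feature": "summarize", "failure_mode": "truncated_output"},
    {"feature": "search", "failure_mode": "hallucinated_citation"},
    {"feature": "summarize", "failure_mode": "none"},
]


def pivot_failures(rows, facet="feature", label="failure_mode"):
    """Count failure labels per facet value, like a small pivot table."""
    table = defaultdict(Counter)
    for row in rows:
        table[row[facet]][row[label]] += 1
    return {key: dict(counts) for key, counts in table.items()}


def failure_rate(rows, label="failure_mode", ok="none"):
    """Share of traces whose label is anything other than `ok`."""
    if not rows:
        return 0.0
    failed = sum(1 for row in rows if row[label] != ok)
    return failed / len(rows)


pivot = pivot_failures(traces)

if __name__ == "__main__":
    for feature, counts in pivot.items():
        print(feature, counts)
    print(f"overall failure rate: {failure_rate(traces):.2f}")
```

Even a table this small shows which failure mode dominates each feature, which is usually more actionable than a single aggregate score. With more data, the same grouping could be done with a dataframe library's pivot function.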

You can watch the livestream here.

The full conversation drops as a podcast soon, so stay tuned!

👉 What’s the biggest challenge you face in debugging AI systems?

📢 More AI & ML Updates

A few things happening in AI this week—from live events to deep dives and free courses:

🎙️ Upcoming: Outerbounds Fireside Chat w/ Ramp

I’m sitting down with Ryan Stevens (Director of Applied Science at Ramp, ex-Meta) to discuss how one of the fastest-growing fintech companies builds ML systems that move money, fight fraud, and scale with the business.

📅 Feb 19 | 2 PM PST – Register here for the livestream

📚 Resource: Sebastian Raschka on Reasoning Models

AI is moving toward more specialized models—and Sebastian Raschka has a great breakdown on the four main approaches to building reasoning LLMs. His latest article covers:

🔹 How LLMs are evolving beyond general-purpose tasks

🔹 Trade-offs in reasoning models like DeepSeek R1

🔹 Tips for developing LLMs with reasoning capabilities

📂 Last Week in AI: What Actually Mattered

It wasn’t a slow week in AI—so I put together a recap doc of the most important drops:

🔍 OpenAI Deep Research – AI that synthesizes and structures findings, not just retrieves them.

💻 GitHub Copilot Agents – A step toward autonomous software engineering assistants.

🚀 Mistral Le Chat – Reportedly 10x faster than ChatGPT-4, pushing 1,100 tokens/sec.

📑 Grab the full doc here

🤖 Join Me in the Hugging Face AI Agents Course

I’m starting the free Hugging Face AI Agents course, and if you’re building with agentic AI, you should too. Let’s go through it together!

📌 Sign up here

👉 Which of these updates interests you most? Reply and let me know!

That’s it for this edition of Vanishing Gradients.

If something stood out to you, reply and let me know—whether you’ve been experimenting with agentic AI, refining LLM debugging workflows, or keeping up with the latest AI research.

And if you found this useful, share it with a friend or colleague who might enjoy it.

Until next time,

Hugo